Introduction

Many folks find the idea of program evaluation pretty intimidating, especially in settings where time, staffing, and financial resources are often limited. Throughout this resource, we hope to help animal sanctuaries see that evaluation is not only practical and possible but critical to ensuring their educational programming operates as effectively and efficiently as possible. Whether you’re in the beginning stages of designing an education program, have just implemented a new program, or have been facilitating one at your sanctuary for quite some time now, evaluation is an important ongoing tool for you!

What is Program Evaluation?

Evaluation is a systematic method of collecting and analyzing information about a program’s characteristics, activities, and outcomes in order to make judgments about the program, improve program effectiveness, and/or inform decisions about future program development.

– Program Performance and Evaluation Office of the Centers for Disease Control and Prevention

Program evaluations can help you answer the following questions:

- How is the program being facilitated?

- What is the program impacting?

- What is working well in the program?

- Where can we improve?

A Brief Overview of Program Evaluation in Humane Education

Humane education isn’t the only pedagogical approach sanctuary education programs can use to advocate for kindness towards animals, but it is a very prominent one. There is a wealth of important anecdotal evidence supporting the long-term impact of sanctuary education, as well as academic research citing the importance of developing humane education values like empathy. Unfortunately, however, empirical research demonstrating the efficacy and long-term impacts of such programs is still scarce. This gap matters for both internal and external stakeholders, including program directors, staff, partners, and participants; board members; granting agencies and other funders; and local community members and policymakers. Without that evidence, they have a harder time distinguishing successful programs from unsuccessful ones, using resources effectively, and improving programs.

Why is Education Program Evaluation Important?

Education program evaluation can offer a plethora of benefits and necessary information for both internal and external sanctuary stakeholders. Here is a non-exhaustive list of some to consider:

Program Efficiency

Evaluation informs your programmatic practices and allows you to figure out what does and doesn’t work in areas such as staff and volunteer recruitment, training, skills, performance, and support; participant enrollment, gains, and satisfaction; financial and material resource use; frequency and duration of instruction; topics covered; and more. Knowing what works and what doesn’t helps ensure that you are using all your resources in the most beneficial way. It also allows you to strengthen the delivery and performance of your programs, because it gives internal stakeholders like staff and volunteers opportunities to discuss the challenges they face and come up with solutions.

Program Effectiveness

Program evaluation allows you to measure your program’s outcomes and impacts and helps ensure that you are reaching the goals you set out to achieve. These could include increases in participant knowledge, organizational awareness, volunteer involvement, sanctuary support, and community engagement; long-term changes in participant and community attitudes and behaviors towards animals and your organization; and much more.

Program Support

Program evaluation can provide credible evidence of effectiveness that can encourage sustained support from and/or be required by various organizational stakeholders, such as program directors, board members, granting agencies and other funders, community members, school officials, parents, teachers, program staff and partners, university administrators, state-level politicians and policymakers, the Department of Education, and more!

Mission Alignment

Program evaluation allows you to determine whether your educational programming aligns with your organization’s mission. This is important because it provides valuable information on how resources are being used and shows stakeholders that you are working within the organization’s broader goals, which also helps ensure their continued support and funding.

Knowledge Share and Field Advancement

Program evaluation is an incredibly valuable tool for furthering research on the efficacy of sanctuary education and of humane education more generally. While most folks in these fields intuitively understand the impact and worth of their education programs, more empirical assessment and documentation are greatly needed. Beyond advancing the field, your evaluations can also provide valuable models and relevant information for other animal sanctuaries, rescues, schools, and community organizations that facilitate similar programs. Sharing your experiences can help others avoid mistakes and replicate successful programmatic strategies! If you are interested in reviewing some of the existing literature and research on the design, implementation, and outcomes of various humane education initiatives, check out this and this.

Continuous Learning

Program evaluation allows for ongoing reflection and helps you create an organization that continuously evolves and moves towards a brighter future.

Preparing and Planning for Program Evaluation

Put a Team Together

Effective program evaluation begins with good planning, and good planning begins with a good team to assist you in the process! You can start by consulting with anyone you think has a vested interest in your education program. This might include program directors, staff, and partners; board members; granting agencies and other potential funders; school officials; university administrators; community policymakers; and more. It’s important to choose team members who will communicate clearly, remain committed throughout the entire process, contribute to the credibility of your evaluation, add technical guidance, and/or have influence over your program’s operations and outcomes. Check out this evaluation guide for more help on determining which organizational stakeholders would make the most sense to include in your education program evaluation.

Reflect on your Current Education Program

After you’ve assembled your team, it’s important to reflect together on your sanctuary’s current education program by closely examining the goals and objectives you originally set out to accomplish. If you’re at the very beginning stages of designing an education program, or are completely re-evaluating your program because your original goals and objectives weren’t super clear or relevant, please check out this resource, which outlines the steps needed to successfully plan a quality education program. It also includes some of the questions you’ll want to consider as a team as you assess the current state of your sanctuary and how it affects your education program and its goals. Now, let’s take a closer look at how to identify and develop educational goals so that they fit your program and your organization’s mission. In time, this will help you determine the most appropriate type of evaluation to conduct and the most relevant evaluation questions to ask.

In education program design, goals and objectives are not the same! According to the Academy of Prosocial Learning, a goal is a broad statement of what a program plans to achieve overall and serves as the foundation for program objectives. For example, many animal sanctuaries facilitate educational programs in order to develop empathy for farmed animals in sanctuary visitors. But how do they actually do that? How do they measure empathy? This is where objectives come in very handy. Objectives are the steps a program takes to achieve a particular goal. In other words, objectives translate your bigger goals into actionable steps that can be measured and subsequently evaluated.

One way to help break your goals down into manageable objectives is by ensuring that they are SMART: specific, measurable, attainable, relevant, and time-bound. A lot of folks find it helpful to make them time-bound by categorizing them into short-term (days/weeks/months), mid-term (months/years), and long-term objectives (months/years). This will also help you group them into categories that can then be measured and evaluated. Examples of objective categories that can be measured and evaluated include:

- Change in knowledge: participants will increase their knowledge of farmed animals and the issues facing them, sanctuaries, etc.

- Change in attitudes: participants will score higher on empathy assessments after the program’s completion, participant perceptions of farmed animals will change after the program, participants will have a more positive view of sanctuary professions and will be more likely to consider sanctuary careers, participants will see sanctuary work as more important after the program, etc.

- Change in behaviors/actions: participants will be more likely to take action to support farmed animals after the program (e.g., increases in veganism, volunteering, donations, fosters, adoptions, and visits after the program), etc.

- Creation of participant “products”: participants will be able to create “products” that demonstrate multiple levels of learning and knowledge (e.g., final projects; informational fliers, brochures, and posters; presentations; books; artwork; videos; etc.)

- Views on a sanctuary education program: participants will be satisfied with the program, participants will like different aspects of the program, etc.

Goals & Objectives Hypothetical Example

Feeling like a lot? Let’s take a look at a hypothetical example of a sanctuary education program at an imaginary organization called “Chicks for Change Urban Chicken Rescue”:

Chicks for Change Urban Chicken Rescue offers month-long sanctuary education programs focused on farmed bird species like chickens and turkeys. Once a week for four weeks, they welcome 15 participants to their location for a two-hour workshop on a different topic related to farmed birds and the issues they face.

Program Goal: To develop empathy for farmed birds in folks who participate in the program

Short-term Objective: Participants will increase their knowledge of farmed birds and the issues facing them. This objective is SMART:

- Specific: 15 participants, 4 two-hour workshops

- Measurable: Participant “products” (e.g., posters, fliers, videos, artwork, essays, presentations, etc.) created at the end of each workshop session can reveal potential increases in knowledge of farmed birds and the issues facing them. This could also be framed as a mid-term objective, measured with a pre-test taken before the program and a post-test taken immediately after the program’s completion.

- Attainable: yes

- Relevant: yes

- Time-bound: by the end of each workshop

Mid-term Objective: Participants will score higher on empathy assessments after the program’s completion. This objective is SMART:

- Specific: 15 participants, 4 two-hour workshops

- Measurable: A pre-test taken before the first workshop and a post-test taken immediately after the program’s completion can identify potential increases in empathy towards farmed birds.

- Attainable: yes

- Relevant: yes

- Time-bound: four weeks

Long-term Objective: Participants will be more likely to take action to support farmed birds after the program’s completion (e.g., increase in sanctuary volunteers and donations, veganism, etc.). This objective is SMART:

- Specific: 15 participants, 4 two-hour workshops

- Measurable: A pre-test taken before the program and a post-test taken 6+ months after the program’s completion can identify potential increases in volunteering, donations, veganism, etc.

- Attainable: yes

- Relevant: yes

- Time-bound: 6+ months
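If your team tracks many objectives, keeping them in one consistent structure makes gaps easy to spot. The Python sketch below records the three Chicks for Change objectives from the example above and flags any SMART criterion left blank. The `Objective` class, the `missing_smart_criteria` helper, and the extra draft objective are hypothetical illustrations, not part of any particular tool; a shared spreadsheet with one column per criterion would serve the same purpose.

```python
from dataclasses import dataclass


# Hypothetical record for one program objective; each SMART criterion is a short note.
@dataclass
class Objective:
    name: str
    term: str  # "short", "mid", or "long"
    specific: str
    measurable: str
    attainable: str
    relevant: str
    time_bound: str


SMART_CRITERIA = ("specific", "measurable", "attainable", "relevant", "time_bound")


def missing_smart_criteria(obj: Objective) -> list:
    """Return the names of SMART criteria that were left blank for this objective."""
    return [c for c in SMART_CRITERIA if not getattr(obj, c).strip()]


SPECIFIC = "15 participants, 4 two-hour workshops"

objectives = [
    Objective("Increase knowledge of farmed birds and the issues facing them", "short",
              SPECIFIC, "Participant products made at the end of each workshop",
              "yes", "yes", "By the end of each workshop"),
    Objective("Score higher on empathy assessments", "mid",
              SPECIFIC, "Pre-test before the program, post-test right after it",
              "yes", "yes", "Four weeks"),
    Objective("Take more action to support farmed birds", "long",
              SPECIFIC, "Pre-test before the program, post-test 6+ months after it",
              "yes", "yes", "6+ months"),
    # Hypothetical draft objective that has no measurement plan yet.
    Objective("Feel more connected to the sanctuary", "mid",
              SPECIFIC, "", "yes", "yes", "Four weeks"),
]

for obj in objectives:
    gaps = missing_smart_criteria(obj)
    status = "SMART" if not gaps else "missing " + ", ".join(gaps)
    print(f"[{obj.term}-term] {obj.name}: {status}")
```

Running it lists the first three objectives as SMART and flags the draft objective as missing a measurement plan, which is the kind of gap worth resolving before the program starts.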

Reflective Exercise

What overall goals does your education program hope to accomplish? What steps (objectives) does your program take to work towards that goal? Are they SMART? Can you group them into categories that can be measured and evaluated?

Audit your Resources

One of the most pressing questions for the sanctuary education and humane education fields is whether their programs have a long-lasting impact. Few evaluations to date have measured effects beyond six months post-program, largely because properly conducting long-term research requires substantial time and resources. However, it’s important to remember that the more evidence we collect, the easier it will be to validate and disseminate knowledge and to create conditions that support further evaluation and more impactful sanctuary education programs! Evidence also allows us to streamline our resources and focus on what works. That said, time, money, and staff are rarely abundant in sanctuary settings. Thorough program evaluation, which can include formative, process, and outcome evaluation, pre-tests, post-tests, control groups, and involvement from multiple disciplines and methodologies, typically costs about 10% to 20% of an overall program budget.

As such, it’s important to take stock of your sanctuary’s current resources (e.g., staffing, volunteers, space, materials, funds, time, etc.) prior to implementing an evaluation. Auditing your resources will help you understand your limitations and set reasonable expectations for what you have available to invest in a program evaluation. It can also be helpful to consider collaborating with community members outside of your sanctuary, such as volunteers and colleges or universities, who might be able to offer more funding opportunities and expertise in evaluation processes. If you decide to rely on your own program staff to conduct evaluations, remember to train them and support them properly and incorporate their evaluative activities into their other ongoing program management activities so they don’t feel overwhelmed.
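To turn the 10% to 20% guideline above into a concrete number for your resource audit, multiply it by your program budget. In this minimal Python sketch, the $5,000 program budget is a made-up figure for illustration, and `evaluation_budget_range` is a hypothetical helper name.

```python
def evaluation_budget_range(program_budget: float, low: float = 0.10, high: float = 0.20) -> tuple:
    """Return the (low, high) evaluation budget implied by a percentage range of the program budget."""
    if program_budget < 0:
        raise ValueError("program_budget must not be negative")
    if not 0 <= low <= high <= 1:
        raise ValueError("expected 0 <= low <= high <= 1")
    return program_budget * low, program_budget * high


# Hypothetical annual budget for an education program.
low, high = evaluation_budget_range(5000)
print(f"Plan for roughly ${low:,.0f} to ${high:,.0f} of evaluation costs")
# prints: Plan for roughly $500 to $1,000 of evaluation costs
```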

Many folks find it helpful to create a visual aid showing what kinds of resources they have and where they’d like (and are able) to allocate them in the evaluation process. These visuals can also be immensely helpful after the evaluation process because you can see where resources were used (e.g., specific program activities) and how (e.g., effectively or not: did they help you accomplish what you set out to do?).

Animals as Self-Determining Teaching Partners

Research has shown that humane education programs can be effective both with and without interactions with nonhuman animals. So, please consider what is most appropriate based on your sanctuary’s philosophy, residents, program objectives, staff, and space. Your residents should maintain the right to decide whether they want to participate in your education program. If residents show interest in participating in your program, it is critically important to ensure the mental, emotional, and physical safety of both nonhuman and human program participants at all times. Neglecting to do this greatly increases the chances of negative impacts on all participants and can undermine your program’s overall effectiveness. Please see this resource for more information on this topic.

Consider Existing Education Models for Evaluation Design

Make sure you do a thorough exploration of existing research on education programs that are similar to yours. This and this can help you find out what others have discovered in the process of designing, implementing, and evaluating similar programs, which will inform your own process. You might be able to build on evaluations that have already been conducted. You’ll also be more informed about the topics you’re focusing on in your program and better equipped with knowledge regarding what works, what doesn’t work, and what questions still need to be answered!

Designing your Program Evaluation

So, you’ve gathered your team, reflected on your current program, audited your sanctuary’s resources, and done your research on similar programs. Now what? It’s time to design your evaluation! Contrary to popular belief, program evaluation designs do not have to be complicated. In fact, so long as they will help you effectively determine whether you’re meeting your programmatic goals, you can design your evaluation to be as simple as you’d like!

Different Types of Evaluation

There are many types of program evaluation, but the most commonly used ones typically fall within three broad categories: formative evaluation, process evaluation, and outcome evaluation. Each sheds light on whether you’ve achieved the goals you originally set out to accomplish and whether the strategies you employed helped you get there. Ideally, a very thorough program evaluation would include all three. It’s important, however, that you choose the one(s) that make the most sense for your program, your staff, your stakeholders, your time, and your budget.

A Note on Novel Approaches to Program Evaluation

While the types of evaluation outlined and described in this resource are commonly used, we want to acknowledge that it may not be possible or even desirable for all animal sanctuaries to evaluate the progress and impact of their education programs using traditional evaluative tools. There are a plethora of novel approaches to program evaluation that sanctuaries can consider and create, and we strongly encourage all organizations to utilize the evaluative tools that make the most sense for them. Measuring goals looks different across different communities!

Formative Evaluation

Formative evaluations are formal and informal assessment procedures that educators conduct during the learning process (e.g., workshop, lesson, tour, session, etc.) to check in on participants’ learning experience. They allow you to obtain feedback and modify your teaching, materials, and learning activities in real time, so that you can improve the learning experience and delivery of your program as you go! Formative evaluation is valuable no matter what phase your program is in and should ideally be done multiple times.

What formative evaluation shows: Formative evaluation shows how participants are performing during workshops, lessons, and tours; levels of participant proficiency; how well participants understand the material being covered; what participants are and aren’t learning; where participants are struggling; participant progress; participants’ thoughts and feelings about how they are progressing and what they like or dislike about a particular assignment; etc.

When to use formative evaluation: This is a great tool to use during any phase of your program, but especially prior to (e.g., pilot program) or in the very beginning of program implementation. Formative evaluation can ensure that a program or program activity is feasible, appropriate, and acceptable before it is fully implemented.

Why formative evaluation is useful:

- Allows educators to monitor and update instructional approaches in real time

- Shows whether modifications need to be made to learning materials, activities, or assignments to match the educators’ and participants’ needs

- Allows educators to identify misconceptions, struggles, and learning gaps and address them along the way

- Gives educators a quick look at how effective or not a certain work plan is

- Improves teaching and learning simultaneously

Examples of formative evaluation:

- Informal notes that record anecdotal evidence of the learning experience through educator observations, interviews, or focus groups

- Informal worksheets and pop quizzes to see what participants have understood

- Written assignments asking participants to write down all they know about a particular topic so that educators can discover what participants already know about the topic they are intending to teach

- In-class discussions

- Small group feedback sessions

- Clicker questions

- Homework assignments and final projects

- Written and oral surveys in the middle or at the end of a lesson asking participants how they are feeling about the program and responding to the material

- Participants can draw a concept map during the learning process to represent their understanding of a topic

- Written assignments asking participants to submit one or two sentences identifying the main point of a lesson or workshop

- “Traffic cards” that participants can use to indicate how well they understand a concept during a lesson. Green means that the participant understands the concept and the educator can move on, yellow indicates that the educator should slow down because the participant only somewhat understands the concept, and red indicates that the participant would like the educator to stop and explain the concept more clearly because they don’t understand it

- Written assignments at the end of a lesson asking participants to name one important thing they learned in the workshop

Process Evaluation

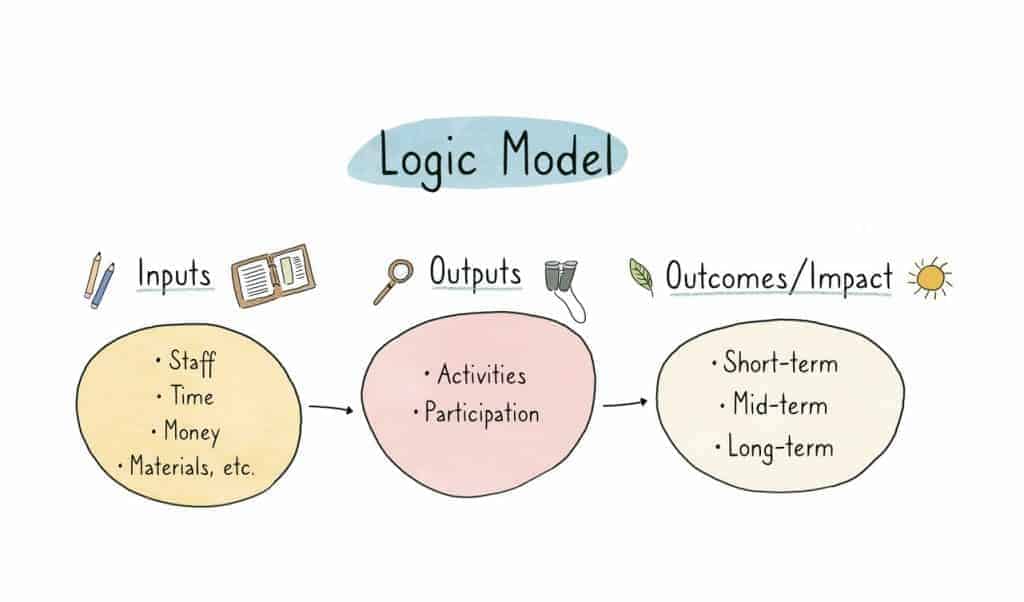

Process evaluation is the practice of collecting, analyzing, and using information to assess the mechanics (e.g., resources and delivery) of your program in order to determine the extent to which it is operating as intended and being delivered in the best possible manner. It focuses on program inputs (e.g., time, human, and financial resources) and outputs (e.g., learning activities, instructional delivery, participation) and allows you to determine whether your inputs have produced the intended outputs. Gathering this information also helps ensure that you reach your intended outcome/impact! Or, at the very least, it helps you understand why you did not. Process evaluation is most valuable during the implementation phase of your program.

What process evaluation shows: Process evaluation shows, among other things:

- Whether you are delivering the information you originally set out to deliver, within the set timeframe

- Whether the time spent on program activities is appropriate

- Whether the resources you need to properly facilitate your program are being used

- Whether there are enough resources (financial, time, staffing, materials) to properly facilitate your program

- Whether the workshop/lesson materials are accessible and developmentally appropriate for your audience

- Whether the material is being presented properly and consistently from session to session and instructor to instructor

- Whether facilitators are being trained and supported appropriately

- Whether the lesson plans include relevant information for facilitators and help them teach the program properly

- Whether the program assessments appropriately measure the information you’re trying to obtain from participants

- Whether participants are enjoying your program

- Whether the target population is being reached

- Whether the program activities are leading to expected results

- What problems or unforeseen consequences are encountered in delivering the program, and how they are resolved

- Whether your program needs to be amended in any way

Streamline your Process Evaluation

Measuring every detail of program implementation is neither feasible nor necessary. The process indicators you choose to evaluate should therefore reflect the priorities of your program, your staff, your stakeholders, your time, and your budget.

When to use process evaluation: This is a great tool to use after your program has begun and routinely throughout the implementation phase.

Why process evaluation is useful:

- Helps ensure that your program is being delivered according to design

- Helps you identify the strengths and weaknesses of your program design and delivery methods

- Helps stakeholders understand why a program is successful or not

- Helps stakeholders see how a program outcome or impact was achieved

- Helps stakeholders brainstorm and implement alternative ways of providing program services

- Reveals early challenges and saves you subsequent time and funding

- Helps you allocate and reallocate resources in better ways

- Helps you develop the most appropriate program materials

- Helps you hire, train, and support your staff more effectively

- Allows you to alter program activities and improve the chances of positive outcomes

- Aids in replication in case another organization wants to facilitate a program similar to yours

Examples of process evaluation:

- An implementation checklist to record adherence (or not) to your original work plan. This could take the form of a logic model*

- Accurate records of participation, attendance, and tracking of program reach among your target population

- Demographic documentation of program participants and staff, as well as the community in which your program is delivered

- Written documentation of time and duration of activities and program sessions

- Systematic observation and notes on what is happening in your program

- Documentation of resources used

- An exit summary that describes the key parts of a workshop/lesson plan/session

- Assessments indicating overall improvement (or not) in the desired area or change in behavior/engagement

- Surveys asking participants whether they were satisfied with the program

- Surveys asking staff whether they were satisfied with the program
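Attendance records become more useful for process evaluation once you summarize them. The Python sketch below assumes hypothetical sign-in sheets for the Chicks for Change program (15 enrolled participants, four weekly workshops) and computes the attendance rate for each session and how many participants attended at least three of the four sessions. The participant IDs, attendance numbers, and three-session threshold are invented for illustration.

```python
# Hypothetical enrolled participants, P01 through P15.
enrolled = {f"P{i:02d}" for i in range(1, 16)}

# Hypothetical sign-in sheets: workshop number -> IDs of participants present.
attendance = {
    1: {f"P{i:02d}" for i in range(1, 16)},  # P01-P15 (all 15)
    2: {f"P{i:02d}" for i in range(1, 14)},  # P01-P13
    3: {f"P{i:02d}" for i in range(1, 13)},  # P01-P12
    4: {f"P{i:02d}" for i in range(2, 14)},  # P02-P13
}


def session_attendance_rates(attendance: dict, enrolled: set) -> dict:
    """Share of enrolled participants present at each session, keyed by session number."""
    return {s: len(present & enrolled) / len(enrolled)
            for s, present in sorted(attendance.items())}


def participants_meeting_threshold(attendance: dict, enrolled: set, min_sessions: int) -> set:
    """IDs of enrolled participants who attended at least `min_sessions` sessions."""
    counts = {p: 0 for p in enrolled}
    for present in attendance.values():
        for p in present & enrolled:
            counts[p] += 1
    return {p for p, n in counts.items() if n >= min_sessions}


for session, rate in session_attendance_rates(attendance, enrolled).items():
    print(f"Workshop {session}: {rate:.0%} attended")

completers = participants_meeting_threshold(attendance, enrolled, min_sessions=3)
print(f"{len(completers)} of {len(enrolled)} attended at least 3 workshops")
```

Comparing these numbers against the participation targets in your work plan is one simple way to check whether the program is reaching the people it was designed for.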

*Logic Models

This is where a logic model can come in really handy! A logic model is a visual representation of how a program is expected to work, relating inputs (e.g., time, human, and financial resources, space, materials), outputs (e.g., learning activities, instructional delivery, participation), and the intended outcomes or impacts that the program is expected to create as a result of those inputs and outputs. For many folks, it’s an essential tool to refer to during process evaluation because it helps program stakeholders determine the extent to which their program is operating as intended. In simpler terms, a logic model is a road map that doubles as a checklist, letting you see how the resources you put into your program lead to the change your organization hopes to make!

Visual Aid

For each component in a logic model (inputs, outputs, outcomes), evaluators should develop a number of indicators to use as measurement guidelines for determining the extent to which they are adhering to their original work plan. Remember, the goal of a process evaluation is to help you determine whether the time, human, and financial resources (inputs) you invested in your program resulted in the effective execution of the intended learning activities, instructional delivery, and participation (outputs). The inputs you invest, and the outputs they make possible, are meant to help you reach your intended outcomes: “If we use these resources to do these things, then we should see these results.”

So, let’s say you list a certain amount of money and a certain number of staff members in the inputs section of your logic model, expecting that they will enable you to deliver your program to your intended audience (an output). A simple indicator for this output could be a “yes or no”: did the program reach the intended audience? Once data on all relevant indicators in your logic model have been collected after program delivery, you can compare your results to your original targets, goals, and indicators and make modifications accordingly.
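A logic model’s indicators can also be kept as a simple list of targets and actual results, so the comparison described above is easy to repeat after each program cycle. This Python sketch shows one hypothetical way to do that: each indicator belongs to an input, output, or outcome, has a target (either a yes/no value or a minimum number), and gets an actual value once data are collected. The `Indicator` class and all of the example targets and results are invented for illustration, not drawn from a real program.

```python
from dataclasses import dataclass
from typing import Optional, Union

Value = Union[bool, float]


@dataclass
class Indicator:
    component: str                  # "input", "output", or "outcome"
    description: str
    target: Value                   # a yes/no target (bool) or a minimum number
    actual: Optional[Value] = None  # filled in after data collection

    def met(self) -> Optional[bool]:
        """True/False once data exist; None while the result is still pending."""
        if self.actual is None:
            return None
        if isinstance(self.target, bool):
            # Yes/no indicator: the actual answer must match the target answer.
            return bool(self.actual) == self.target
        # Numeric indicator: the actual value must reach the target.
        return self.actual >= self.target


# Hypothetical indicators for a four-workshop program.
logic_model = [
    Indicator("input", "Trained staff members assigned to each workshop", 2, 2),
    Indicator("output", "Program delivered to the intended audience (yes/no)", True, True),
    Indicator("output", "Workshops delivered as planned", 4, 3),
    Indicator("outcome", "Average gain in empathy score", 1.0),  # not yet measured
]

for ind in logic_model:
    result = ind.met()
    label = "pending" if result is None else ("met" if result else "not met")
    print(f"{ind.component:<8} {ind.description}: {label}")
```

A shared spreadsheet with the same columns would work just as well; the useful habit is recording targets before the program and actual results afterward.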

Create your Own Logic Model

Logic models can look different depending on the organization and its program(s). If you’d like to learn more about logic models and create your own, check out this resource for additional information and guidance on how to design one and incorporate it into your program evaluation efforts.

Outcome Evaluation

Outcome evaluation is the assessment of the results of your education program. It examines the impact, effectiveness, and relevance of your program and answers the questions “Is this program making a difference?” and “Did the program succeed in meeting its goals?”

What outcome evaluation shows: changes in participant attitudes, behaviors, and beliefs, organizational awareness, volunteer involvement, sanctuary support, and community engagement before and after your program, and more!

When to use outcome evaluation: Outcome evaluation is typically completed after your education program ends, but it needs to be planned before the program begins, because an effective outcome evaluation usually includes a pre-test given before the program, a post-test, and a control group (a comparable group of people who do not take part in the program). The post-test should ideally be given at least twice: once immediately after the program and once later down the road (preferably several months to years later).
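To see why the control group matters, it helps to look at the arithmetic. One common, simple comparison subtracts the control group’s average change from the program group’s average change (an approach often called difference-in-differences), which helps separate the program’s effect from changes that would have happened anyway. The Python sketch below assumes hypothetical empathy scores for five program participants and five comparable non-participants, with each person’s pre-test and post-test listed in the same order; the numbers are invented. It does not test statistical significance, so with groups this small, treat the result as a rough signal rather than proof.

```python
from statistics import mean


def mean_gain(pre: list, post: list) -> float:
    """Average post-test minus pre-test change, pairing each person's two scores by position."""
    if len(pre) != len(post) or not pre:
        raise ValueError("need one pre-test and one post-test score per person")
    return mean(after - before for before, after in zip(pre, post))


# Hypothetical empathy scores (higher = more empathy).
program_pre, program_post = [3, 4, 2, 5, 3], [5, 5, 4, 6, 4]
control_pre, control_post = [3, 4, 3, 4, 2], [3, 5, 3, 4, 3]

program_change = mean_gain(program_pre, program_post)  # individual gains: 2, 1, 2, 1, 1
control_change = mean_gain(control_pre, control_post)  # individual gains: 0, 1, 0, 0, 1
estimated_effect = program_change - control_change

print(f"Program group change: {program_change:.2f}")
print(f"Control group change: {control_change:.2f}")
print(f"Change beyond the control group: {estimated_effect:.2f}")
```

Without the control group, the program group’s average gain of 1.40 would be the whole story; subtracting the control group’s 0.40 suggests that part of that gain might have happened even without the program.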

Why outcome evaluation is useful:

- Shows whether what you planned to achieve was actually achieved by providing evidence-based information that is credible, reliable, and useful

- Evidence of positive impact can encourage sustained support from various organizational stakeholders

- Highlights your program’s benefits

- Aids in replication in case another organization wants to facilitate a program similar to yours

Examples of outcome evaluation:

- Pre-tests, post-tests, and control groups measuring changes in specific constructs such as knowledge, attitudes, behaviors, beliefs, volunteer involvement, donations, etc.

- Self-assessment surveys and questionnaires

- Peer/educator logs, surveys and formalized observations

- Interviews and interactive focus groups

- Participant portfolios

Choosing the Right Type of Evaluation

Now that you have a better understanding of the different types of evaluations that are available, you’ll need to decide which one is most appropriate for you at this time. Let’s take a look at some of the next questions you’ll need to answer in order to make the right choice for your program.

Determine the What, the Why, and the Who of Your Program Evaluation

The WHAT

- What are you evaluating?

- What do you want to know at the end of your evaluation? Statistical information like numbers of participants who went vegan after your program? Qualitative information like stories? Information about what’s working and what isn’t working?

- How does this relate to your program goals?

The WHY

- Why are you evaluating?

- What is your purpose?

- Is it to strengthen your program?

- Is it to hold yourselves accountable?

- Are you looking for more funding opportunities?

The WHO

- For whom are you evaluating?

- To whom do you need to provide answers to your evaluation question(s) (e.g., staff, administrators, board members, public, granting agencies, publications, etc.)?

Determine What Programmatic Phase You’re In

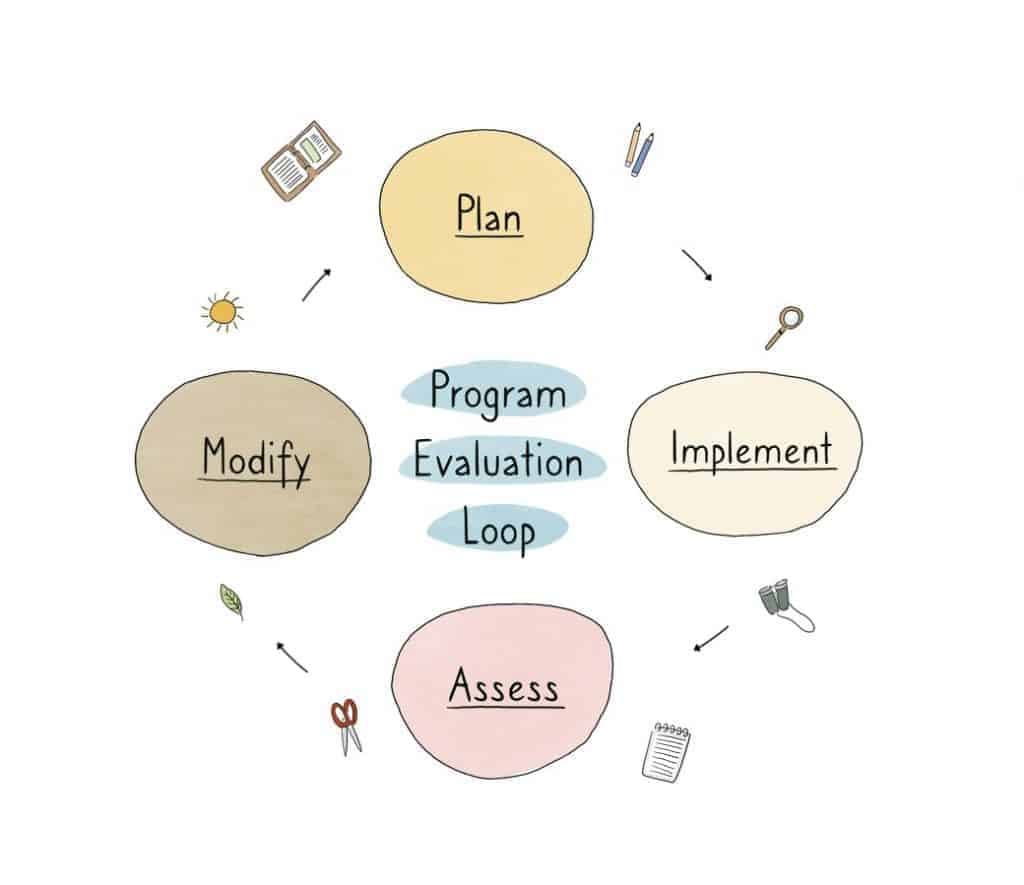

Before you choose an evaluation type, it’s also very important to determine what phase your education program is currently in. Are you in the planning phase of program design? Then, you might want to consider prioritizing formative evaluation. Are you in the implementation phase of program facilitation and need more information about the mechanics of your program? Then, you might want to consider prioritizing process evaluation. Or are you in the assessment and modification phases of your program and ready to measure impact? Then, perhaps you’re ready to conduct an outcome evaluation. Knowing this will help you determine the most appropriate type of evaluation to choose.

Although evaluation is ideally an ongoing tool that you will utilize throughout each phase of your program, you might not have the resources to do that right now. If this is the case, rest assured that proper evaluation at any programmatic phase will lead to incredibly useful information about the effectiveness of your program. Here’s a visual aid to help you determine what phase you’re in:

Determine What Aspect of Your Goal You’re Going to Measure and Identify your Evaluation Questions

Based on your answers to the “what,” “why,” and “who” questions, the goals and objectives you outlined during program planning, and the programmatic phase you’re in, you should now be able to decide which aspect(s) of your program you’re going to measure and identify the evaluation questions you hope to answer. Like your program objectives, your evaluation questions should be specific and measurable.

Streamline your Process

Remember, it’s impossible to measure everything. So, it might be helpful to start by choosing one of your programmatic goals or objectives that can easily be measured and go from there!

Let’s revisit our hypothetical education program at Chicks for Change Urban Chicken Rescue. Remember that their program’s overall goal is to develop empathy for farmed birds in the folks who participate, and their short-term objective is for participants to increase their knowledge of farmed birds and the issues facing them. So, the purpose of one of their evaluations might be to see what knowledge is being transferred from educators to participants. Based on this information, here are some potential evaluation questions Chicks for Change could ask:

- Do participants understand the main ideas of basic farmed bird care? (formative evaluation)

- How can we help participants better understand appropriate food and shelter for farmed birds? For example, how much time do we spend teaching about food and shelter? (process evaluation)

Reexamine your Resources

You already took stock of your resources during the evaluation preparation stage. Now that you’ve reviewed the types of evaluation and decided what you’d like to evaluate, why, and for whom, the next step is to determine whether you actually have the means to conduct that type of evaluation properly. Depending on the type of evaluation you choose, you will need a certain amount of dedicated staff, funds, materials, time, and space. The question now is: can you reach your evaluation goals with what you currently have? Here are the questions to consider as you reexamine your resources:

Human Resources

How many program facilitators will you need? How many evaluators will you need? Volunteers? Interns? Do you need to bring someone in with specific expertise (e.g., analysts from local universities or local businesses)? Will you need an administrator to help you organize the information you collect? How many humans can you dedicate to this evaluation?

Financial Resources

How much money do you currently have to invest in this evaluation? Will you need additional funding?

Time

How much time can you dedicate to a proper evaluation? We recommend creating a detailed timeline so you can design an evaluation that is realistic and achievable for you.

Implementing your Evaluation: Methods and Tools for Collecting Data

Methodology

Now that you’ve determined which aspects of your program goals you’re going to measure and identified your evaluation questions, you need a roadmap for turning them into something actionable! That roadmap is your methodology: how you’re going to collect the information you need to answer your evaluation questions. There are three potential methods to choose from: qualitative, quantitative, and mixed methods. We’ve summarized each of them below, but feel free to check out this resource for more information. After you’ve learned more about each, go back to your goals, your “what,” your “why,” and your “who,” and decide which one makes the most sense for your evaluation.

Qualitative

The researcher collects non-numerical information, such as observations, interviews, focus groups, and participant “products” like work samples, words, and anecdotes, often by using open-ended questions and assignments.

Quantitative

The researcher aims to keep their own interpretation out of the inquiry as much as possible by using structured tools like surveys and tests that produce numerical information.

Mixed Methods

This is a combination of qualitative and quantitative data collection methods, and it often helps researchers build a more complete picture of participant progress (e.g., using participant journal reflections in conjunction with numerical test results).

Tools

Your methodology will determine the tools you’ll need to collect your information and there are a variety of tools to choose from! Here’s a non-exhaustive list of data collection tools you might consider using in your sanctuary education program evaluation:

- Rubrics for assessing participant “products” like artwork, essays, journal entries, final projects, presentations, etc.

- Demographic surveys that can help provide a more accurate picture of your participants and control groups

- Surveys related to workshop/lesson/session relevance, ease of administration, delivery and instruction

- Surveys and tests documenting changes in attitudes: IAS (Intermediate Attitude Scale), IECA (Index of Empathy for Children and Adults), PAS (Primary Attitude Scale), Fireman Test, a simple one-question assessment of the changes in attitudes of students towards nonhuman animals, a group of words that participants can choose from to describe their feelings towards nonhuman animals before and after the program

- Surveys and tests documenting changes in behaviors: pre-tests and post-tests that contain opportunities to take action at your sanctuary (e.g., scholarships and gift certificates redeemable at your sanctuary for a prize, foster/adoption applications, resident enrichment building, volunteer information, etc.), or self-reported intended behavior change, such as how many hours per month participants say they would be willing to volunteer at the sanctuary before and after program completion

- Surveys and tests documenting changes in knowledge: multiple choice tests given before and after program completion where participant responses are analyzed based on dichotomous right or wrong answers

- Surveys and tests documenting changes in volunteer hours

- Surveys and tests documenting changes in donations

- Self-assessment forms

- Observations documenting participant experiences

- Reflective journal statements

- Interviews and focus groups

- “Brown paper tweet boards” to capture immediate participant feedback during workshops and lessons. These can be posted throughout different areas of your sanctuary. Participants can “tweet” their immediate reactions in each area of the sanctuary where there is a “tweet board” using provided writing utensils.

- Portfolios

- And more!
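The multiple-choice knowledge tests mentioned above, scored dichotomously as right or wrong, can be tallied with a few lines of Python. This is a minimal sketch, assuming a hypothetical five-question test: the answer key, participant IDs, and responses are invented for illustration and are not real data. Scoring every test with the same function also keeps processing consistent across pre-tests and post-tests.

```python
# Hypothetical answer key for a five-question multiple-choice knowledge test.
ANSWER_KEY = ["b", "a", "d", "c", "a"]

def score_test(responses, key=ANSWER_KEY):
    """Dichotomous scoring: 1 point for each correct answer, 0 otherwise."""
    if len(responses) != len(key):
        raise ValueError("response count does not match the answer key")
    return sum(1 for given, correct in zip(responses, key)
               if given.strip().lower() == correct)

# Hypothetical pre-test and post-test responses, keyed by participant ID.
pre_tests = {
    "P01": ["b", "c", "a", "c", "b"],
    "P02": ["a", "a", "d", "b", "a"],
}
post_tests = {
    "P01": ["b", "a", "d", "c", "b"],
    "P02": ["b", "a", "d", "c", "a"],
}

for pid in sorted(pre_tests):
    pre = score_test(pre_tests[pid])
    post = score_test(post_tests[pid])
    print(f"{pid}: pre={pre}, post={post}, change={post - pre}")
# P01: pre=2, post=4, change=2
# P02: pre=3, post=5, change=2
```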

Before deciding on the tools you’ll use, we recommend researching the tools other education programs have used in their own evaluations. You can search online library databases, read trade periodicals and journals, attend conferences, and more. Tools that have already been created and validated can lessen your workload, give you confidence that you are evaluating your program appropriately, and increase the validity of your results. Many tools are freely available to anyone who wishes to use them, but some require the author’s permission for outside use, so as you explore other program evaluation models, consider reaching out to the authors to confirm. If you choose to design your own evaluation tool(s), be aware that creating reliable and valid rubrics, tests, surveys, interview guides, and focus group protocols takes a lot of work. We recommend using a well-supported existing tool as a model, or even hiring a consultant with expertise in evaluation design.

A Note on Likert-type Scales and Surveys

Likert-type scales and surveys that use broad, simple statements to measure ethical competencies like empathy are sometimes unable to fully capture the changes taking place in education program participants. In certain instances, they might even limit or discourage constructive dialogue, especially around issues related to nonhuman animals. Therefore, it might be helpful to use or develop more complex evaluation tools that can more accurately reflect changes in ethical competencies, such as participant projects, portfolios, interviews, and journal reflections. It might also be helpful to analyze the actions your participants take to support nonhuman animals after completing your education program, since behavioral assessments can demonstrate changes in both actions and attitudes.

The Importance of Utilizing Pre-tests in Conjunction with Post-tests and Control Groups

Evaluation that uses pre-tests, post-tests, and control groups gives a more complete picture of the impact your program is having on participants. Knowing what participant and non-participant (control group) knowledge, behaviors, and attitudes look like before and after the program helps you distinguish changes likely due to your program from changes that would have happened anyway, for example from outside learning or from simply taking the same test twice. This matters not only for reliably measuring change, including long-term change when you follow up later, but also for adapting learning materials and instruction in a way that best suits your facilitators and participants.
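A pre-test/post-test design with a control group can be summarized by comparing the average change in each group. The Python sketch below is a minimal illustration using invented scores out of 10; it assumes the two groups are otherwise comparable, which a real evaluation would need to justify, and it is a rough estimate rather than a full statistical analysis.

```python
from statistics import mean

def mean_gain(pairs):
    """Average (post - pre) change across a list of (pre, post) score pairs."""
    return mean(post - pre for pre, post in pairs)

# Hypothetical (pre-test, post-test) knowledge scores out of 10.
program_group = [(4, 8), (5, 7), (3, 7), (6, 9)]   # attended the program
control_group = [(4, 5), (5, 5), (3, 4), (6, 7)]   # took both tests, no program

program_gain = mean_gain(program_group)
control_gain = mean_gain(control_group)

print(f"Program group average gain: {program_gain:.2f}")   # 3.25
print(f"Control group average gain: {control_gain:.2f}")   # 0.75
print(f"Difference in gains: {program_gain - control_gain:.2f}")  # 2.50
```

Here the control group also improved slightly, so crediting the program with the full 3.25-point gain would overstate its effect; the 2.50-point difference is the more cautious figure.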

Reflective Exercise: Putting It Together

What methodological approach will your program take and why? What tools will your program utilize? Do you need permission to use them? Are they validated and well-supported?

Evaluation Implementation, Analysis, and Dissemination of your Findings

After you’ve thoughtfully designed your evaluation, it’s time to put it into practice and collect all your data! But what do you do with all that data while you’re collecting it and what do you do with it after you’re done?

Protocols to Follow While You’re Collecting Qualitative Information

- Process the information you collect immediately and write down what you feel is most usable and relevant. You could create a reflection sheet template that includes things like date, time, facilitator, and location, and fill it out after each workshop, lesson, or session so the information stays consistent throughout the entire evaluation process.

- Code your information as you go! Coding is a way of organizing qualitative information by labeling pieces of it with categories for the things that stand out to you. Computer-assisted qualitative data analysis software (CAQDAS) can help you with this.

Protocols to Follow After You’re Done Collecting Qualitative Information

- Review your data and organize them into meaningful themes and patterns (e.g., repetition of particular words or phrases, missing information, metaphors and analogies, etc.).

- Look at your information in relation to your original evaluation question(s) and set aside anything that isn’t meaningful for answering them.

- For computer-assisted help in identifying data themes, check out this resource on Theme Identification in Qualitative Research
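Looking for repeated words or phrases, one of the patterns listed above, can be given a head start with a simple word count. This is a minimal Python sketch, assuming reflections have been typed into a list of strings; the entries and the short stop-word list are hypothetical. A count like this only flags candidate themes. Someone still needs to read each entry in context and decide what the themes actually are.

```python
import re
from collections import Counter

# Hypothetical reflections typed up after a sanctuary workshop (not real data).
reflections = [
    "I didn't know chickens had such different personalities.",
    "Meeting the chickens made me think about where eggs come from.",
    "The hens were curious, and every hen had her own personality.",
]

# A short, illustrative list of common words to skip; adjust it for your own data.
STOP_WORDS = {"i", "the", "and", "a", "an", "to", "of", "me", "had", "her",
              "were", "about", "where", "from"}

def word_counts(entries):
    """Count how often each non-stop word appears across all entries."""
    counts = Counter()
    for entry in entries:
        # Lowercase, then treat runs of letters and apostrophes as words.
        words = re.findall(r"[a-z']+", entry.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts

# Print only words that appear more than once, as candidates for a closer look.
for word, count in word_counts(reflections).most_common():
    if count > 1:
        print(word, count)  # chickens 2
```

Notice that "hen," "hens," and "chickens" are counted separately, as are "personality" and "personalities." A person reading these entries would likely group them under shared themes, which a simple count like this does not do.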

Protocols to Follow While You’re Collecting Quantitative Information

- Enter the information you collect into a spreadsheet or statistical software package like Excel, SAS, or SPSS, and organize it.

- Stay consistent: score and enter the responses from each tool in exactly the same way every time.

Protocols to Follow After You’re Done Collecting Quantitative Information

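- Look at your information in relation to your evaluation question(s) and analyze it using descriptive statistics (which summarize your data, such as average scores or the percentage of participants who answered correctly) and/or inferential statistics (which help you judge whether a change likely reflects a real effect rather than chance). Statistics can be tricky. If you are unsure how to analyze your information in this way, we recommend seeking support from someone with expertise in this area.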
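As a small illustration, the Python sketch below summarizes invented pre/post scores for the same six participants with the mean, median, and standard deviation of their change (descriptive), then computes a paired t statistic, one common inferential test for pre/post designs. It stops at the t statistic; turning that into a p-value requires a t-distribution table or a package such as SciPy, and whether a paired t-test suits your data is exactly the kind of question to bring to someone with statistical expertise.

```python
from math import sqrt
from statistics import mean, median, stdev

# Hypothetical pre-test and post-test scores for the same six participants, in the same order.
pre = [3, 5, 4, 6, 2, 5]
post = [6, 7, 5, 8, 5, 6]

# Each participant's change from pre-test to post-test.
changes = [after - before for before, after in zip(pre, post)]

# Descriptive statistics: summarize what happened in this group.
print(f"Mean pre-test score:  {mean(pre):.2f}")    # 4.17
print(f"Mean post-test score: {mean(post):.2f}")   # 6.17
print(f"Mean change: {mean(changes):.2f}, median change: {median(changes)}")
print(f"Standard deviation of change: {stdev(changes):.2f}")  # 0.89

# Inferential statistic: paired t = mean change / (sample sd of change / sqrt(n)).
n = len(changes)
t_stat = mean(changes) / (stdev(changes) / sqrt(n))
print(f"Paired t statistic with {n - 1} degrees of freedom: {t_stat:.2f}")  # 5.48
```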

Summarize the Information and Share Your Findings

Now, with your team, take a close look at all of the relevant information you’ve collected and organized. What important discoveries have you made about your program? Summarize these discoveries and share them with all relevant program stakeholders. Were there any unexpected outcomes? Challenges? Benefits? Successes? It’s important to be honest about your findings so that you can improve upon the next implementation of your education program! Depending on who you’re sharing the information with and how you’re sharing the information (e.g., via social media, handouts, brochures, mailings, conferences, newsletters, journals, etc.), you’ll want to adjust the style and formatting of your findings to be more or less formal. It can also be helpful to create visual representations of your findings to make them more appealing. This could include things like charts, graphs, photographs, and artwork.

Modifications and Follow-Up

Remember that evaluation is an ongoing process and program modification is a very important part of that process! After you’ve looked over your findings and shared them with all relevant organizational stakeholders, it’s important to utilize that information and the feedback you receive to modify and improve your program as needed. What could you do differently during the next implementation phase of your education program that would make it more impactful? Implement these modifications into your program and start the evaluation process over. If used regularly and properly, evaluation can be critical to ensuring your educational programming operates as efficiently and effectively as possible.

We also recommend following up with anyone who was actively involved in the implementation of your program (e.g., participants, school teachers, volunteers, etc.). Follow-up that measures long-term impact is essential and should be incorporated into your evaluation planning and design whenever possible. Sending a thank-you note to external program partners that includes meaningful highlights from the experience and possibly some photos of the group can also go a long way in maintaining a positive relationship with them and sustaining your program.

Sources:

Analyzing Qualitative Data for Evaluation | Centers for Disease Control and Prevention

An Annotated Bibliography of Research Relevant to Humane Education | The National Association for Humane and Environmental Education

Course HE 006 Introduction to Evaluating Humane Education Programs | Academy of Prosocial Learning

Developing a Logic Model or Theory of Change | Community Tool Box

Evaluation Toolkit | The Pell Institute and Pathways to College Network

Formative and Summative Assessments | Yale Poorvu Center for Teaching and Learning

Introduction to Program Evaluation for Public Health Programs: A Self-Study Guide | Centers for Disease Control and Prevention

Process Evaluation | RAND Corporation

The International Journal of Humane Education | Academy of Prosocial Learning

The Strengths and Weaknesses of Research Methodology: Comparison and Complimentary between Qualitative and Quantitative Approaches | Looi Theam Choy

Tip Sheet on Question Wording | Harvard University Program on Survey Research

Types of Evaluation | Centers for Disease Control and Prevention

What is the Difference Between Formative and Summative Assessment? | Carnegie Mellon University Eberly Center

Why Conduct a Program Evaluation? Five Reasons Why Evaluation Can Help an Out-of-School Time Program | Allison J. R. Metz, Ph.D.